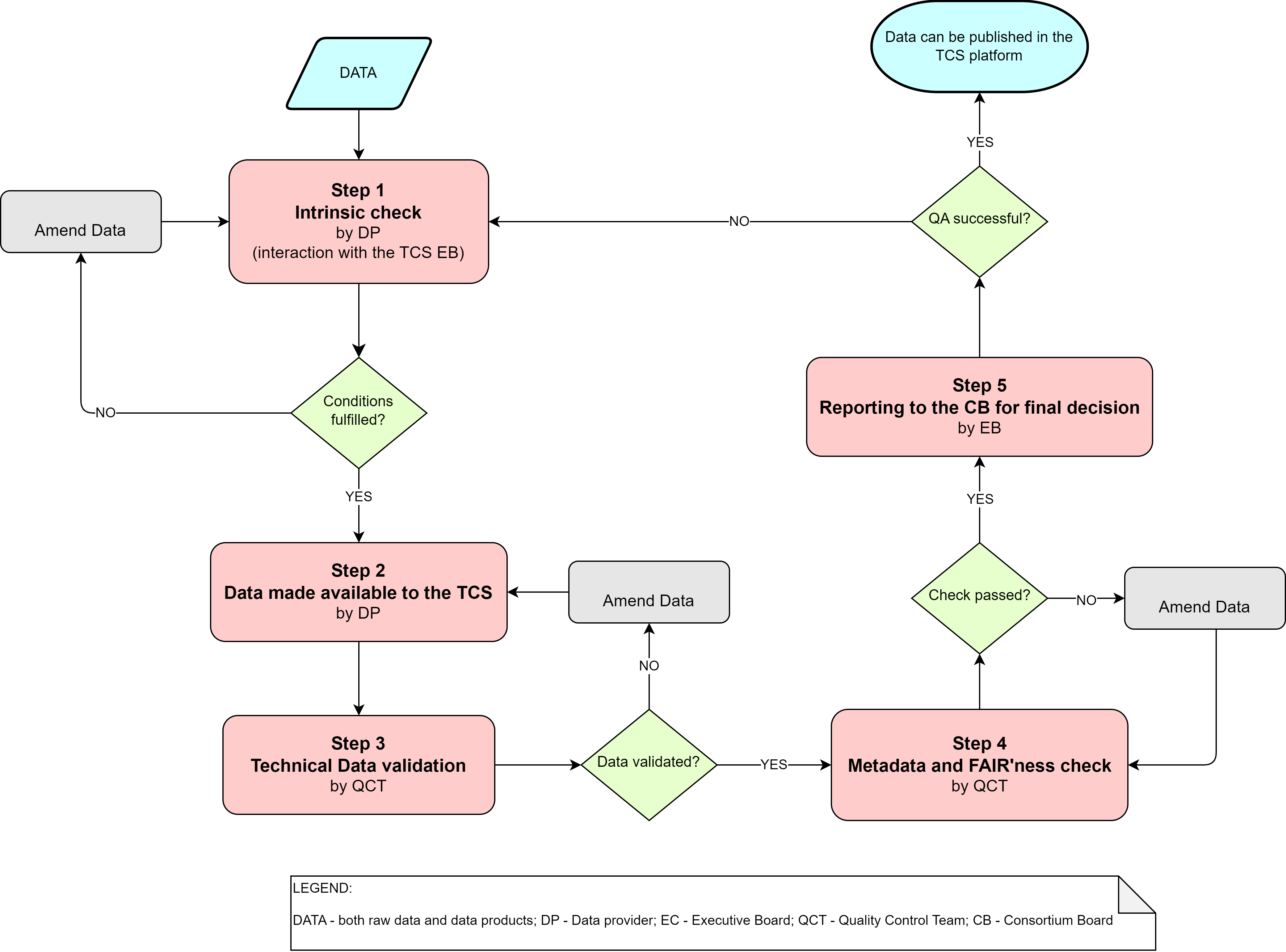

Quality control of TCS Tsunami Data is carried out according to the flowchart above.

The TCS Consortium Board may consider distributing new Data, either proposed by TCS Member(s) or solicited from the community, depending on the execution of the TCS work program and on the principles set out in the EPOS Data Policy.

The Data Provider prepares Data for distribution, establishes a roadmap toward adopting the FAIR principles, and submits the package to the TCS Executive Board.

The TCS Executive Board appoints a temporary ad-hoc Quality Control Team (QCT) to check and validate the Data, ensuring that they conform to commonly used standards and formats and, in general, follow the FAIR principles. The QCT identifies any problems and inconsistencies and asks the Data Provider to amend the Data or Metadata. The QCT may also seek advice from the Advisory Groups.

Once approved, the Data are made available through the TCS portal. Access may initially be restricted while the final quality and integrity checks are carried out (possibly jointly by the Data Provider and the QCT).

Once all final checks are complete, the Data can, if applicable, be published in the EPOS ICS-C portal.
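The workflow described above can be sketched as a small state machine. This is a minimal illustration only, assuming a single linear path with one loop back to the Data Provider whenever the QCT requests amendments; the names `Stage`, `TRANSITIONS` and `advance` are hypothetical and are not part of any TCS or EPOS software.

```python
from enum import Enum, auto


class Stage(Enum):
    """Hypothetical labels for the stages described in the text."""
    PROPOSED = auto()      # Consortium Board considers new Data
    PREPARED = auto()      # Data Provider prepares Data and a FAIR roadmap
    SUBMITTED = auto()     # package submitted to the TCS Executive Board
    QCT_REVIEW = auto()    # ad-hoc QCT checks and validates Data and Metadata
    TCS_PORTAL = auto()    # approved; available on the TCS portal (possibly restricted at first)
    ICS_C_PORTAL = auto()  # published in the EPOS ICS-C portal, if applicable


# Allowed transitions (assumed). QCT_REVIEW -> PREPARED models a request
# to amend Data or Metadata, after which the package is resubmitted.
TRANSITIONS = {
    Stage.PROPOSED: {Stage.PREPARED},
    Stage.PREPARED: {Stage.SUBMITTED},
    Stage.SUBMITTED: {Stage.QCT_REVIEW},
    Stage.QCT_REVIEW: {Stage.PREPARED, Stage.TCS_PORTAL},
    Stage.TCS_PORTAL: {Stage.ICS_C_PORTAL},
    Stage.ICS_C_PORTAL: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return target if the transition from current is allowed, else raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"transition {current.name} -> {target.name} is not allowed")
    return target
```

Encoding the transitions as data makes it easy to see, for example, that Data cannot reach the TCS portal without passing through QCT review.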

* Here, ‘Data’ stands for raw data and data products; a separate flowchart will describe Software Quality Assurance.

This step is carried out by the Data Provider and also contributes to adopting the FAIR principles. The following questions should be addressed:

- Do the Data address the objectives?

- Which instrument(s) were used to collect and prepare the Data?

- What are the criteria for including a particular data item in the catalogue/dataset?

- Are source references (e.g., documents from which Data originate) clearly identifiable?

- Have potential Data limitations (e.g., artifacts, bugs) been addressed?

- Has the quality of the Data already been checked or reviewed?

- Does the Data Provider fully own the Data?

The Data Provider presents the Data, with reference to the envisaged Service, to the TCS Executive Board. The following question should be addressed:

- Are Data easily available and usable without further intervention from the Data Provider?

This step is carried out by the Quality Control Team (QCT), the temporary ad-hoc team of experts appointed by the TCS Executive Board to assess the technical aspects of Data publishing quality. The following questions should be addressed:

- Has the community accepted the instruments/methods used to collect and prepare data?

- Has the community approved possible (post-)processing techniques (e.g., filtering)?

- Are Data presented in conventional formats (e.g., OGC standards for geospatial data)?

- Have files been named consistently?

- Are Metadata available?
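The question on consistent file naming lends itself to an automated check. The sketch below assumes a hypothetical naming convention, `<dataset>_<YYYYMMDD>.csv`; the actual convention is for the Data Provider and the QCT to agree on, and the function name `inconsistent_names` is illustrative only.

```python
import re
from typing import Iterable, List


def inconsistent_names(filenames: Iterable[str], pattern: str) -> List[str]:
    """Return the file names that do not fully match the given naming pattern."""
    regex = re.compile(pattern)
    return [name for name in filenames if regex.fullmatch(name) is None]


# Hypothetical convention: lower-case dataset identifier, underscore, 8-digit date, .csv
NAMING_PATTERN = r"[a-z0-9]+_\d{8}\.csv"

files = ["catalogue_20200115.csv", "catalogue_20210301.csv", "Catalogue-2022.CSV"]
print(inconsistent_names(files, NAMING_PATTERN))  # ['Catalogue-2022.CSV']
```

Using `fullmatch` rather than `search` ensures that the whole name follows the convention, not just part of it.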

Step 4: Metadata and FAIRness check

This step is again carried out by the QCT and serves as the final check before publishing. The following questions should be addressed:

- Are Data comprehensively documented?

- Do the Data and Metadata have properties that allow their FAIRness to be assessed?

- Are Data limitations (e.g., artifacts, bugs) well documented?

- Is the person or team who collected or compiled the Data clearly identified?

- Is their field of expertise identified?

- Are there any licensing issues or restrictions that need to be addressed before Data publishing?

- When were the Data initially collected/compiled?

- Is information available on how often the Data are planned to be updated?
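Several of the Step 4 questions reduce to whether specific metadata items are present. A minimal sketch, assuming the metadata are held as a flat key-value record; the field names below are hypothetical and do not correspond to an EPOS or TCS metadata schema. The check only detects missing or blank entries; judging whether the documentation is comprehensive still requires the QCT.

```python
from typing import Dict, List

# Hypothetical metadata keys, one per Step 4 question (not an EPOS/TCS schema).
REQUIRED_FIELDS = {
    "documentation": "comprehensive documentation of the Data",
    "limitations": "documented Data limitations (e.g., artifacts, bugs)",
    "creator": "person or team who collected/compiled the Data",
    "creator_expertise": "field of expertise of the creator",
    "license": "licensing terms or restrictions",
    "collection_date": "when the Data were initially collected/compiled",
    "update_frequency": "planned frequency of Data updates",
}


def missing_metadata(record: Dict[str, str]) -> List[str]:
    """Return the required keys that are absent or blank in a metadata record."""
    return [key for key in REQUIRED_FIELDS if not str(record.get(key, "")).strip()]


record = {
    "documentation": "user guide v1",
    "creator": "Example Team",
    "license": "CC BY 4.0",
    "collection_date": "2019-05-01",
}
print(missing_metadata(record))  # ['limitations', 'creator_expertise', 'update_frequency']
```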

Step 5: Reporting to the Consortium Board

Based on the QCT report, the TCS Executive Board makes recommendations on Data publishing to the TCS Consortium Board, which takes the final decision based on the answer to the question:

- Do the Data fulfill all Quality Assurance criteria?
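The final question can be viewed as an aggregation of the answers collected in the earlier steps. A minimal sketch, assuming each question is recorded as resolved (`True`) or not (`False`); the `StepReview` class and `fulfils_all_criteria` function are hypothetical, and such a summary could only support the Consortium Board's decision, not replace it.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StepReview:
    """Hypothetical record of one step: question -> True if satisfactorily resolved."""
    name: str
    answers: Dict[str, bool] = field(default_factory=dict)

    def open_issues(self) -> List[str]:
        """Questions in this step that are not yet resolved."""
        return [question for question, ok in self.answers.items() if not ok]


def fulfils_all_criteria(steps: List[StepReview]) -> bool:
    """True only if no step has any unresolved question."""
    return all(not step.open_issues() for step in steps)
```

Keeping the open issues per step makes it straightforward to report back to the Data Provider exactly which questions still need attention.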